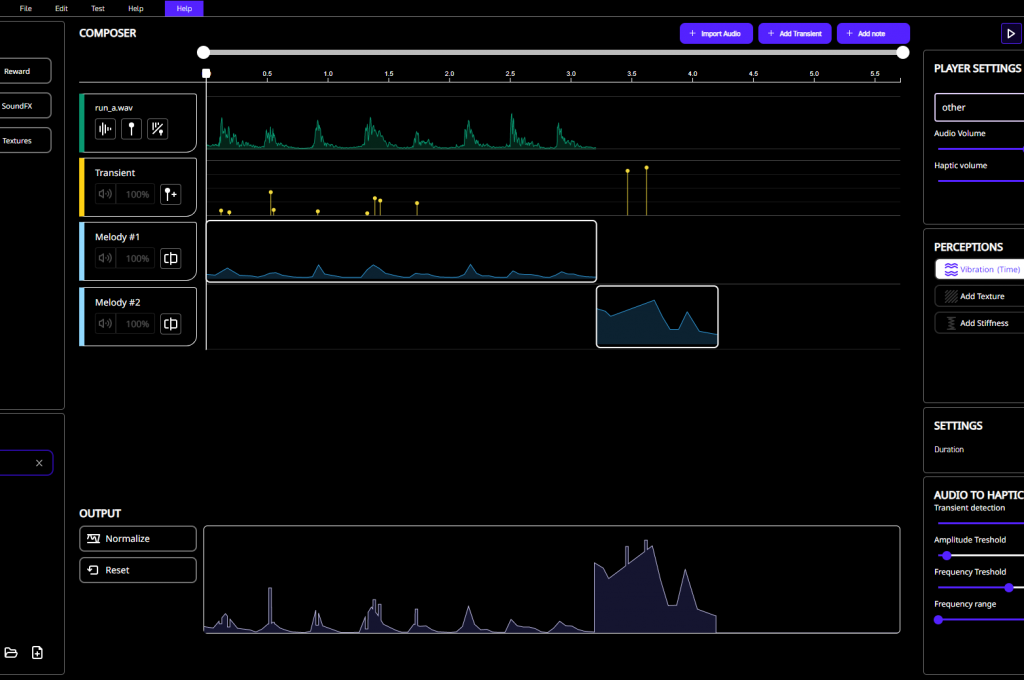

Haptics Streaming Unleashed: Interhaptics & Razer Sensa HD Haptics Transform Immersive Experiences

Gamers love watching live streams and seeing their favorite players make incredible plays and rack up wins – but what if we could take the action to the next level,