Unleash the unlimited power of gaming with haptics

Integrate HD haptics for all platforms, all peripherals, all technologies, all in a few clicks.

Make a better game with haptics

14% MORE ENJOYABLE*

19% MORE IMMERSIVE*

14% HIGHER GAME RATING**

Technology

DESIGN

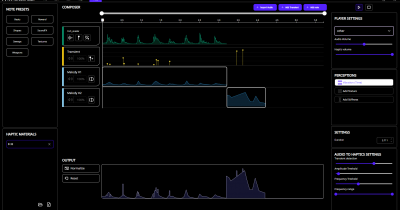

Haptic Composer

Design HD haptics with a dedicated product and customize audio-to-haptics effects.

Audio to Haptics

Extract haptic features from audio tracks, then customize them with the Haptic Composer.

TEST

In-App Testing

Experience HD haptic effects as you create them with the Haptic Composer. Feel them in real time on the DualSense™ controller and the Razer Kraken V3 HyperSense headset.

Interhaptics Player

Test and compare your haptic effects instantly on iOS and Android smartphones with a single click.

PLAY

Interhaptics Engine

A real-time, high-fidelity haptics renderer for console, mobile, XR and PC.

Haptic SDK

A haptic SDK for Android, iOS, PlayStation®5 consoles, XInput controllers, OpenXR and Meta Quest.

* Juicy Haptic Design: Vibrotactile Embellishments Can Improve Player Experience in Games, Singhal and Schneider, CHI 2021

** Haptics in Games, IDRAC and Florida State study (in progress)

“PlayStation Family Mark”, “PlayStation”, “PS5 logo”, “PS4 logo”, “DualSense” and “DUALSHOCK” are registered trademarks or trademarks of Sony Interactive Entertainment Inc.

Nintendo Switch is a trademark of Nintendo.

POWERED BY INTERHAPTICS

Discord Community

Share experiences, get help, and stay up to date with the latest Haptic Composer and SDK updates.